Intro

Swift Global Cluster [1] is a feature that allows Swift to span multiple regions. By default, Swift operates in single-region mode. Setting up Swift Global Cluster is not difficult, but, as usual, the configuration overhead is very high. Fortunately, application modelling tools such as Juju [2] simplify software installation and configuration. I have recently added support for the Swift Global Cluster feature to the Swift charms [3]. In this article, I will show how to set up Swift in multi-region mode with Juju.

Design

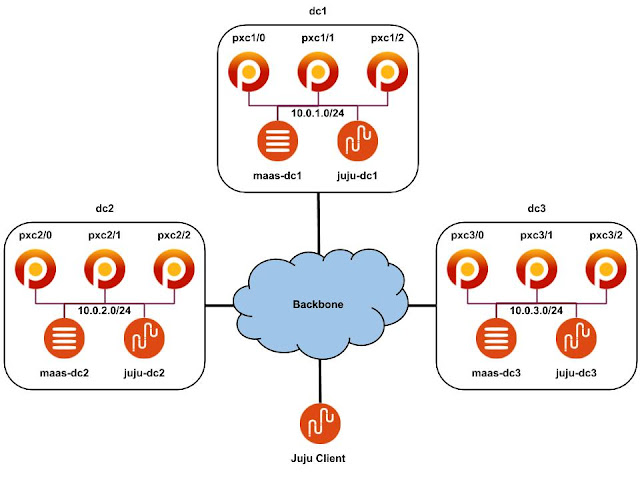

Let's assume that you have two geographically-distributed sites: dc1 and dc2, and you want to deploy Swift regions 1 and 2 in them respectively. We will use Juju for modelling purposes and MaaS [4] as a provider for Juju. Each site has MaaS installed, configured and three nodes enlisted, and commissioned in MaaS. 10.0.1.0/24 and 10.0.2.0/24 subnets are routed and there are no restrictions between them. The whole environment is managed from a Juju client which is external to the sites. The above concept is presented in the following figure:

Each node will host the Swift storage service and an LXD container [5] running the Swift proxy service. The Swift proxy will be deployed in HA mode. Each node belongs to a different zone and has three disks (sdb, sdc and sdd) for object storage. The end goal is to have three replicas of each object in each site.

P.S.: If you have more than two sites, don't worry. Swift Global Cluster scales out, so you can easily add more regions later on.

Initial deployment

Let's assume that you already have the Juju client installed, two MaaS clouds added to the client, and a Juju controller bootstrapped in each cloud. If you don't know how to do this, refer to the Juju documentation [2]. You can list the Juju controllers by executing the following command:

$ juju list-controllers
Controller  Model    User   Access     Cloud/Region  Models  Machines  HA    Version
juju-dc1*   default  admin  superuser  maas-dc1      2       1         none  2.5.1
juju-dc2    default  admin  superuser  maas-dc2      2       1         none  2.5.1

NOTE: Make sure you use Juju version 2.5.1 or later.
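If you script the deployment, a version guard can fail early on an older client. The following is a minimal sketch, assuming that `juju version` prints a string such as `2.5.1-bionic-amd64` (dotted version first, then "-"-separated qualifiers); the helper names are hypothetical.

```python
import re
import subprocess

MIN_JUJU_VERSION = (2, 5, 1)


def parse_juju_version(text: str) -> tuple:
    """Extract the numeric part of a Juju version string.

    Assumes a format like "2.5.1-bionic-amd64": the dotted number comes first,
    optionally followed by qualifiers such as series and architecture.
    """
    match = re.match(r"\s*(\d+(?:\.\d+)*)", text)
    if match is None:
        raise ValueError(f"unrecognised Juju version: {text!r}")
    return tuple(int(part) for part in match.group(1).split("."))


def is_supported(text: str, minimum: tuple = MIN_JUJU_VERSION) -> bool:
    """Return True if the version string is at least `minimum`."""
    return parse_juju_version(text) >= minimum


def check_local_client() -> None:
    """Query the installed client and stop if it is older than 2.5.1."""
    output = subprocess.run(
        ["juju", "version"], capture_output=True, text=True, check=True
    ).stdout
    if not is_supported(output):
        raise SystemExit(f"Juju {output.strip()} is too old; need 2.5.1 or later")
```

Comparing integer tuples rather than raw strings matters here: compared as strings, "2.10.0" would sort before "2.5.1".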

The asterisk indicates the controller currently in use. You can switch between controllers by executing the following command:

$ juju switch <controller_name>

Before we start, we have to download the patched charms from the branches I created (they haven't been merged into the upstream code yet):

$ cd /tmp
$ git clone git@github.com:tytus-kurek/charm-swift-proxy.git
$ git clone git@github.com:tytus-kurek/charm-swift-storage.git
$ cd charm-swift-proxy
$ git checkout 1815879
$ cd ../charm-swift-storage
$ git checkout 1815879

Then we create the Juju bundles that will be used to deploy the models:

$ cat <<EOF > /tmp/swift-dc1.yaml
series: bionic
services:
  swift-storage-dc1-zone1:
    charm: /tmp/charm-swift-storage
    num_units: 1
    options:
      block-device: sdb sdc sdd
      region: 1
      zone: 1
  swift-storage-dc1-zone2:
    charm: /tmp/charm-swift-storage
    num_units: 1
    options:
      block-device: sdb sdc sdd
      region: 1
      zone: 2
  swift-storage-dc1-zone3:
    charm: /tmp/charm-swift-storage
    num_units: 1
    options:
      block-device: sdb sdc sdd
      region: 1
      zone: 3
  swift-proxy-dc1:
    charm: /tmp/charm-swift-proxy
    num_units: 3
    options:
      enable-multi-region: true
      read-affinity: "r1=100, r2=200"
      region: "RegionOne"
      replicas: 3
      vip: "10.0.1.254"
      write-affinity: "r1, r2"
      write-affinity-node-count: 3
      zone-assignment: manual
    to:
      - lxd:swift-storage-dc1-zone1/0
      - lxd:swift-storage-dc1-zone2/0
      - lxd:swift-storage-dc1-zone3/0
  hacluster-swift-proxy-dc1:
    charm: cs:hacluster
relations:
  - [ "hacluster-swift-proxy-dc1:ha", "swift-proxy-dc1:ha" ]
  - [ "swift-proxy-dc1:swift-storage", "swift-storage-dc1-zone1:swift-storage" ]
  - [ "swift-proxy-dc1:swift-storage", "swift-storage-dc1-zone2:swift-storage" ]
  - [ "swift-proxy-dc1:swift-storage", "swift-storage-dc1-zone3:swift-storage" ]
EOF

$ cat <<EOF > /tmp/swift-dc2.yaml
series: bionic
services:
  swift-storage-dc2-zone1:
    charm: /tmp/charm-swift-storage
    num_units: 1
    options:
      block-device: sdb sdc sdd
      region: 2
      zone: 1
  swift-storage-dc2-zone2:
    charm: /tmp/charm-swift-storage
    num_units: 1
    options:
      block-device: sdb sdc sdd
      region: 2
      zone: 2
  swift-storage-dc2-zone3:
    charm: /tmp/charm-swift-storage
    num_units: 1
    options:
      block-device: sdb sdc sdd
      region: 2
      zone: 3
  swift-proxy-dc2:
    charm: /tmp/charm-swift-proxy
    num_units: 3
    options:
      enable-multi-region: true
      read-affinity: "r2=100, r1=200"
      region: "RegionTwo"
      replicas: 3
      vip: "10.0.2.254"
      write-affinity: "r2, r1"
      write-affinity-node-count: 3
      zone-assignment: manual
    to:
      - lxd:swift-storage-dc2-zone1/0
      - lxd:swift-storage-dc2-zone2/0
      - lxd:swift-storage-dc2-zone3/0
  hacluster-swift-proxy-dc2:
    charm: cs:hacluster
relations:
  - [ "hacluster-swift-proxy-dc2:ha", "swift-proxy-dc2:ha" ]
  - [ "swift-proxy-dc2:swift-storage", "swift-storage-dc2-zone1:swift-storage" ]
  - [ "swift-proxy-dc2:swift-storage", "swift-storage-dc2-zone2:swift-storage" ]
  - [ "swift-proxy-dc2:swift-storage", "swift-storage-dc2-zone3:swift-storage" ]
EOF
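The two bundles differ only in the site name, the Swift region number, the OpenStack region name, the VIP and the affinity strings, so they can also be generated. The following is a minimal sketch using only the Python standard library; the function name and its parameters are hypothetical. It assumes one swift-storage application per zone, a swift-proxy unit in an LXD container on each storage machine (bundle placement of the form `lxd:<application>/<unit>`), and the hacluster subordinate managing the VIP through the `ha` endpoint of swift-proxy.

```python
from typing import List


def render_site_bundle(
    site: str,
    region: int,
    openstack_region: str,
    vip: str,
    read_affinity: str,
    write_affinity: str,
    zones: int = 3,
    replicas: int = 3,
    write_affinity_node_count: int = 3,
) -> str:
    """Render the Juju bundle (as YAML text) for one site of the cluster.

    Assumptions: one swift-storage application per zone, one swift-proxy unit
    per zone placed in LXD containers on the storage machines, and the
    hacluster subordinate handling the VIP.
    """
    proxy = f"swift-proxy-{site}"
    storage_apps = [f"swift-storage-{site}-zone{z}" for z in range(1, zones + 1)]
    lines: List[str] = ["series: bionic", "services:"]
    for zone, app in enumerate(storage_apps, start=1):
        lines += [
            f"  {app}:",
            "    charm: /tmp/charm-swift-storage",
            "    num_units: 1",
            "    options:",
            "      block-device: sdb sdc sdd",
            f"      region: {region}",
            f"      zone: {zone}",
        ]
    lines += [
        f"  {proxy}:",
        "    charm: /tmp/charm-swift-proxy",
        f"    num_units: {zones}",
        "    options:",
        "      enable-multi-region: true",
        f'      read-affinity: "{read_affinity}"',
        f'      region: "{openstack_region}"',
        f"      replicas: {replicas}",
        f'      vip: "{vip}"',
        f'      write-affinity: "{write_affinity}"',
        f"      write-affinity-node-count: {write_affinity_node_count}",
        "      zone-assignment: manual",
        "    to:",
    ]
    # One LXD container per storage machine, addressed via the storage unit.
    lines += [f"      - lxd:{app}/0" for app in storage_apps]
    lines += [f"  hacluster-{proxy}:", "    charm: cs:hacluster", "relations:"]
    lines.append(f'  - [ "hacluster-{proxy}:ha", "{proxy}:ha" ]')
    lines += [
        f'  - [ "{proxy}:swift-storage", "{app}:swift-storage" ]'
        for app in storage_apps
    ]
    return "\n".join(lines) + "\n"


# Example (writes the dc2 bundle):
# with open("/tmp/swift-dc2.yaml", "w") as f:
#     f.write(render_site_bundle("dc2", 2, "RegionTwo", "10.0.2.254",
#                                "r2=100, r1=200", "r2, r1"))
```

Generating the text from one function keeps the per-site values in a single place, which helps once more regions are added.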

Note that we mark all storage nodes in dc1 as Swift region 1 and all storage nodes in dc2 as Swift region 2. The affinity settings of the Swift proxy applications determine how data is read and written: each proxy prefers the storage nodes in its local region.
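To make the read-affinity value more concrete, here is a simplified Python model (not Swift's own code) of how a proxy could order candidate nodes for a read: each `rN=P` term (or `rNzM=P` for a single zone) gives matching nodes priority P, lower values are tried first, and nodes matching no term are tried last. The node dictionaries and function names are hypothetical.

```python
import re
from typing import Callable, Dict, List

Node = Dict[str, int]  # hypothetical node record, e.g. {"region": 1, "zone": 2, "id": 7}

_TERM = re.compile(r"^r(\d+)(?:z(\d+))?=(\d+)$")
_UNMATCHED = 2 ** 32  # any value larger than every configured priority


def read_affinity_key(affinity: str) -> Callable[[Node], int]:
    """Build a sort key from a read-affinity string such as "r1=100, r2=200"."""
    rules = []
    for term in affinity.split(","):
        match = _TERM.match(term.strip())
        if match is None:
            raise ValueError(f"bad read-affinity term: {term.strip()!r}")
        region, zone, priority = match.groups()
        rules.append((int(priority), int(region), None if zone is None else int(zone)))

    def key(node: Node) -> int:
        # A node matching several terms gets the best (lowest) priority.
        matches = [
            priority
            for priority, region, zone in rules
            if node["region"] == region and (zone is None or node["zone"] == zone)
        ]
        return min(matches, default=_UNMATCHED)

    return key


def order_for_read(nodes: List[Node], affinity: str) -> List[Node]:
    """Return nodes in the order a proxy with this affinity would try them."""
    # sorted() is stable, so nodes with equal priority keep their ring order.
    return sorted(nodes, key=read_affinity_key(affinity))
```

With "r1=100, r2=200" the dc1 proxy tries region 1 nodes first and falls back to region 2; the dc2 proxy, configured with "r2=100, r1=200", does the opposite. The write-affinity lists follow the same local-first idea.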

Finally, we create the models and deploy the bundles:

$ juju switch juju-dc1
$ juju add-model swift-dc1
$ juju deploy /tmp/swift-dc1.yaml
$ juju switch juju-dc2
$ juju add-model swift-dc2
$ juju deploy /tmp/swift-dc2.yaml

This takes a while. Monitor the output of juju status and wait until all units in both models reach the active state.
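Waiting can be automated by polling the JSON form of the status output. This is a minimal sketch, assuming the layout `applications -> <app> -> units -> <unit> -> workload-status -> current` (with subordinate units nested under `subordinates`) produced by `juju status --format=json`; the function names are hypothetical.

```python
import json
import subprocess
import time
from typing import Iterator, Tuple


def iter_workload_states(status: dict) -> Iterator[Tuple[str, str]]:
    """Yield (unit, workload state) pairs, including subordinate units."""
    for app in status.get("applications", {}).values():
        for unit_name, unit in (app.get("units") or {}).items():
            yield unit_name, unit.get("workload-status", {}).get("current", "unknown")
            for sub_name, sub in (unit.get("subordinates") or {}).items():
                yield sub_name, sub.get("workload-status", {}).get("current", "unknown")


def all_units_active(status: dict) -> bool:
    """True when at least one unit exists and every unit reports 'active'."""
    states = [state for _, state in iter_workload_states(status)]
    return bool(states) and all(state == "active" for state in states)


def wait_until_active(model: str, interval: float = 30.0, timeout: float = 3600.0) -> None:
    """Poll `juju status -m <model>` until all units are active or time runs out."""
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        raw = subprocess.run(
            ["juju", "status", "-m", model, "--format=json"],
            capture_output=True, text=True, check=True,
        ).stdout
        if all_units_active(json.loads(raw)):
            return
        time.sleep(interval)
    raise TimeoutError(f"model {model} did not settle within {timeout} seconds")
```

For the two models in this article, the calls would be `wait_until_active("juju-dc1:swift-dc1")` and `wait_until_active("juju-dc2:swift-dc2")`, since `-m` accepts a `controller:model` pair.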

Setting up Swift Global Cluster

To set up Swift Global Cluster, we have to relate the storage nodes in dc1 to the Swift proxy application in dc2, and vice versa. Moreover, a master-slave relation has to be established between the swift-proxy-dc1 and swift-proxy-dc2 applications. However, as they don't belong to the same model, controller or cloud, we have to create offers [6] first (offers enable cross-model, cross-controller and cross-cloud relations):

$ juju switch juju-dc1
$ juju offer swift-proxy-dc1:master swift-proxy-dc1-master
$ juju offer swift-proxy-dc1:swift-storage swift-proxy-dc1-swift-storage
$ juju switch juju-dc2
$ juju offer swift-proxy-dc2:swift-storage swift-proxy-dc2-swift-storage

Then consume the offers:

$ juju switch juju-dc1
$ juju consume juju-dc2:admin/swift-dc2.swift-proxy-dc2-swift-storage
$ juju switch juju-dc2
$ juju consume juju-dc1:admin/swift-dc1.swift-proxy-dc1-master
$ juju consume juju-dc1:admin/swift-dc1.swift-proxy-dc1-swift-storage
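Offer URLs follow the pattern `[<controller>:][<user>/]<model>.<offer>`, where the controller prefix is a controller name (not a cloud name) and the model part is required. The small Python helper below is a hypothetical sketch for building and checking such URLs before passing them to `juju consume`.

```python
from typing import NamedTuple, Optional


class OfferURL(NamedTuple):
    """Parts of a Juju offer URL: [<controller>:][<user>/]<model>.<offer>."""

    controller: Optional[str]
    user: Optional[str]
    model: str
    offer: str

    def __str__(self) -> str:
        prefix = f"{self.controller}:" if self.controller else ""
        owner = f"{self.user}/" if self.user else ""
        return f"{prefix}{owner}{self.model}.{self.offer}"


def parse_offer_url(url: str) -> OfferURL:
    """Split an offer URL into its parts; raise ValueError if it is malformed."""
    controller: Optional[str] = None
    user: Optional[str] = None
    rest = url
    if ":" in rest:
        controller, rest = rest.split(":", 1)
    if "/" in rest:
        user, rest = rest.split("/", 1)
    model, dot, offer = rest.partition(".")
    if not (dot and model and offer) or controller == "" or user == "":
        raise ValueError(f"expected [<controller>:][<user>/]<model>.<offer>, got {url!r}")
    return OfferURL(controller, user, model, offer)
```

For example, `str(OfferURL("juju-dc1", "admin", "swift-dc1", "swift-proxy-dc1-master"))` yields the URL consumed in dc2, while a URL without the `<model>.` part is rejected.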

Add the required relations:

$ juju switch juju-dc1
$ juju relate swift-storage-dc1-zone1 swift-proxy-dc2-swift-storage
$ juju relate swift-storage-dc1-zone2 swift-proxy-dc2-swift-storage
$ juju relate swift-storage-dc1-zone3 swift-proxy-dc2-swift-storage
$ juju switch juju-dc2
$ juju relate swift-storage-dc2-zone1 swift-proxy-dc1-swift-storage
$ juju relate swift-storage-dc2-zone2 swift-proxy-dc1-swift-storage
$ juju relate swift-storage-dc2-zone3 swift-proxy-dc1-swift-storage
$ juju relate swift-proxy-dc2:slave swift-proxy-dc1-master
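The relations follow a symmetric pattern: each site's storage applications relate to the other site's consumed swift-storage offer, and the non-master proxy relates its slave endpoint to the master offer. The hypothetical Python helper below generates these commands from the naming scheme used in this article. It reproduces the two-site list exactly; applying the same pattern to more than two sites is an assumption, not something verified here.

```python
from typing import Dict, List


def relation_commands(sites: List[str], master: str, zones: int = 3) -> Dict[str, List[str]]:
    """Return, per controller, the cross-site `juju relate` commands.

    Assumed naming scheme (from this article): controller juju-<site>, storage
    applications swift-storage-<site>-zone<N>, offers swift-proxy-<site>-swift-storage
    and swift-proxy-<master>-master, already consumed under their offer names.
    """
    commands: Dict[str, List[str]] = {}
    for site in sites:
        cmds: List[str] = []
        for other in sites:
            if other == site:
                continue
            for zone in range(1, zones + 1):
                cmds.append(
                    f"juju relate swift-storage-{site}-zone{zone} swift-proxy-{other}-swift-storage"
                )
        if site != master:
            cmds.append(f"juju relate swift-proxy-{site}:slave swift-proxy-{master}-master")
        commands[f"juju-{site}"] = cmds
    return commands


# Example: print the commands grouped by controller.
# for controller, cmds in relation_commands(["dc1", "dc2"], "dc1").items():
#     print(f"juju switch {controller}")
#     print("\n".join(cmds))
```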

Finally, increase the replication factor to 6:

$ juju switch juju-dc1
$ juju config swift-proxy-dc1 replicas=6
$ juju switch juju-dc2
$ juju config swift-proxy-dc2 replicas=6

This setting, combined with the affinity settings, results in three replicas of each object being created in each site.

Site failure

At this point, Swift Global Cluster is configured. There are two sites, each acting as a separate Swift region. As each node belongs to a different zone and the replication factor has been set to 6, each storage node hosts one replica of each object. Both proxies can be used to read and write data. Such a cluster is highly available and geo-redundant, which means it can survive the failure of either site. However, due to the eventually consistent nature of Swift, some data can be lost during the failure event.
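Why six replicas end up as one per node can be illustrated with a simplified, equal-weight model of Swift's "as unique as possible" placement: each replica goes to the least-used region, then to the least-used zone within it. This is an illustrative sketch, not the ring builder's actual algorithm (which also accounts for device weights); the function name and topology format are hypothetical.

```python
from collections import Counter
from typing import Dict, List, Tuple

Tier = Tuple[int, int]  # (region, zone)


def disperse(replicas: int, topology: Dict[int, List[int]]) -> List[Tier]:
    """Place replicas one at a time on the least-used region, then the least-used
    zone inside it; ties are broken by the lowest region or zone number."""
    per_region: Counter = Counter()
    per_zone: Counter = Counter()
    placement: List[Tier] = []
    for _ in range(replicas):
        region = min(topology, key=lambda r: (per_region[r], r))
        zone = min(topology[region], key=lambda z: (per_zone[(region, z)], z))
        per_region[region] += 1
        per_zone[(region, zone)] += 1
        placement.append((region, zone))
    return placement
```

With the article's topology, `disperse(6, {1: [1, 2, 3], 2: [1, 2, 3]})` places exactly one replica in every (region, zone) pair, so three per site. In the same model, a combined two-region ring with only three replicas would split them two and one.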

Failover

If dc1 fails, the Swift proxy application in dc2 can be used to read and write data in both regions. However, if dc1 cannot be recovered, swift-proxy-dc2 has to be manually transitioned to master so that additional regions can be deployed. To transition swift-proxy-dc2 to master, execute the following commands:

$ juju switch juju-dc2
$ juju config swift-proxy-dc2 enable-transition-to-master=True

Note that this option should be used with extra caution. After that, additional regions can be deployed by following the instructions from the previous sections. Don't forget to update the affinity settings when deploying additional regions.
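Updating the affinity settings amounts to listing the local region first and the remote regions after it. The hypothetical Python helper below produces both strings for any region. It reproduces the dc1 and dc2 values used in this article; giving all remote regions one shared priority when there are three or more regions is an assumption.

```python
from typing import List, Tuple


def affinity_settings(
    local: int,
    regions: List[int],
    local_priority: int = 100,
    remote_priority: int = 200,
) -> Tuple[str, str]:
    """Return (read_affinity, write_affinity) strings for the proxy in `local`.

    Local region first; every remote region follows with the same, lower
    priority (an assumption once there are more than two regions).
    """
    if local not in regions:
        raise ValueError(f"region {local} is not in {regions}")
    ordered = [local] + [r for r in sorted(regions) if r != local]
    read = ", ".join(
        f"r{r}={local_priority if r == local else remote_priority}" for r in ordered
    )
    write = ", ".join(f"r{r}" for r in ordered)
    return read, write
```

For a hypothetical region 3, the existing dc1 proxy would then be updated with something like `juju config swift-proxy-dc1 read-affinity="r1=100, r2=200, r3=200" write-affinity="r1, r2, r3"`, and the other proxies likewise.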

[4] https://maas.io/