Intro

I recently showed you how to replicate databases between two Percona XtraDB Clusters using asynchronous MySQL replication with Juju [1]. Today I am going to take you one step further and show you how to configure circular asynchronous MySQL replication between geographically distributed Percona XtraDB Clusters. I will use Juju again, as it simplifies not only the deployment but the entire life cycle management. So grab a cup of coffee and watch the world around you change!

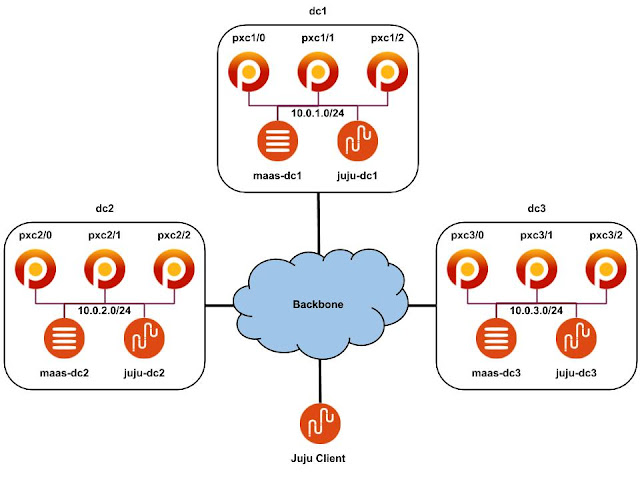

Design

Let's assume that you have three geographically distributed sites: dc1, dc2 and dc3, and that you want to replicate the example database across the Percona XtraDB Clusters located at each site. We will use Juju for modelling and MaaS [2] as the provider for Juju. Each site has MaaS installed and configured, with nodes enlisted and commissioned. The whole environment is managed from a Juju client that is external to the sites. This setup is presented in the following diagram:

P.S.: If you have more than three sites, don't worry. Circular replication scales out, so you can replicate the database across more than three Percona XtraDB Clusters.

Initial deployment

Let's assume that you already have the Juju client installed, all three MaaS clouds added to the client and a Juju controller bootstrapped in each cloud. If you don't know how to do this, refer to the MaaS documentation [3]. You can list the Juju controllers by executing the following command:

$ juju list-controllers

Controller  Model    User   Access     Cloud/Region  Models  Machines  HA    Version
juju-dc1*   default  admin  superuser  maas-dc1           2         1  none  2.3.7
juju-dc2    default  admin  superuser  maas-dc2           2         1  none  2.3.7
juju-dc3    default  admin  superuser  maas-dc3           2         1  none  2.3.7

The asterisk character indicates the current controller in use. You can switch between them by executing the following command:

$ juju switch <controller_name>

In each cloud, on each controller, we create a model and deploy Percona XtraDB Cluster within it. I'm going to use bundles [4] today to make the deployment easier:

$ juju switch juju-dc1

$ juju add-model pxc-rep1

$ cat <<EOF > pxc1.yaml
series: xenial
services:
  pxc1:
    charm: "/tmp/charm-percona-cluster"
    num_units: 3
    options:
      cluster-id: 1
      databases-to-replicate: "example"
      root-password: "root"
      vip: 10.0.1.100
  hacluster-pxc1:
    charm: "cs:hacluster"
    options:
      cluster_count: 3
relations:
  - [ pxc1, hacluster-pxc1 ]
EOF

$ juju deploy pxc1.yaml

$ juju switch juju-dc2

$ juju add-model pxc-rep2

$ cat <<EOF > pxc2.yaml
series: xenial
services:
  pxc2:
    charm: "/tmp/charm-percona-cluster"
    num_units: 3
    options:
      cluster-id: 2
      databases-to-replicate: "example"
      root-password: "root"
      vip: 10.0.2.100
  hacluster-pxc2:
    charm: "cs:hacluster"
    options:
      cluster_count: 3
relations:
  - [ pxc2, hacluster-pxc2 ]
EOF

$ juju deploy pxc2.yaml

$ juju switch juju-dc3

$ juju add-model pxc-rep3

$ cat <<EOF > pxc3.yaml
series: xenial
services:
  pxc3:
    charm: "/tmp/charm-percona-cluster"
    num_units: 3
    options:
      cluster-id: 3
      databases-to-replicate: "example"
      root-password: "root"
      vip: 10.0.3.100
  hacluster-pxc3:
    charm: "cs:hacluster"
    options:
      cluster_count: 3
relations:
  - [ pxc3, hacluster-pxc3 ]
EOF

$ juju deploy pxc3.yaml
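The three bundles above differ only in the site number, so they could also be generated from a single template. Here is a minimal sketch, assuming the naming scheme used in this post (application pxcN, subordinate hacluster-pxcN, VIP 10.0.N.100). The function name make_bundle is my own and not part of Juju:

```shell
#!/bin/sh
# make_bundle N: print the bundle for site N to stdout.
# Hypothetical helper; it assumes the naming scheme from this post:
# application pxcN, subordinate hacluster-pxcN and VIP 10.0.N.100.
make_bundle() {
    n="$1"
    cat <<EOF
series: xenial
services:
  pxc${n}:
    charm: "/tmp/charm-percona-cluster"
    num_units: 3
    options:
      cluster-id: ${n}
      databases-to-replicate: "example"
      root-password: "root"
      vip: 10.0.${n}.100
  hacluster-pxc${n}:
    charm: "cs:hacluster"
    options:
      cluster_count: 3
relations:
  - [ pxc${n}, hacluster-pxc${n} ]
EOF
}

# Example: make_bundle 2 > pxc2.yaml
```

Redirecting the output to pxcN.yaml gives you the same files as the heredocs above, which you can then deploy as before.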

Refill your cup of coffee and, after some time, check the Juju status:

$ juju switch juju-dc1

$ juju status

Model     Controller  Cloud/Region  Version  SLA
pxc-rep1  juju-dc1    maas-dc1      2.3.7    unsupported

App             Version       Status  Scale  Charm            Store  Rev  OS      Notes
hacluster-pxc1                active      3  hacluster        local    0  ubuntu
pxc1            5.6.37-26.21  active      3  percona-cluster  local   45  ubuntu

Unit                 Workload  Agent  Machine  Public address  Ports     Message
pxc1/0*              active    idle   0        10.0.1.1        3306/tcp  Unit is ready
  hacluster-pxc1/0*  active    idle            10.0.1.1                  Unit is ready and clustered
pxc1/1               active    idle   1        10.0.1.2        3306/tcp  Unit is ready
  hacluster-pxc1/1   active    idle            10.0.1.2                  Unit is ready and clustered
pxc1/2               active    idle   2        10.0.1.3        3306/tcp  Unit is ready
  hacluster-pxc1/2   active    idle            10.0.1.3                  Unit is ready and clustered

Machine  State    DNS       Inst id        Series  AZ  Message
0        started  10.0.1.1  juju-83da9e-0  xenial      Running
1        started  10.0.1.2  juju-83da9e-1  xenial      Running
2        started  10.0.1.3  juju-83da9e-2  xenial      Running

Relation provider      Requirer               Interface        Type         Message
hacluster-pxc1:ha      pxc1:ha                hacluster        subordinate
hacluster-pxc1:hanode  hacluster-pxc1:hanode  hacluster        peer
pxc1:cluster           pxc1:cluster           percona-cluster  peer

Once all units have reached the active state, you're ready to go. Remember to check the status in all three models.
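If you would rather not switch controllers by hand for each check, a small loop can print the status of every model in one go. This is only a sketch: it assumes the controller and model names used in this post (juju-dcN and pxc-repN) and addresses each model with the -m controller:model form. The JUJU variable is a hypothetical override of mine that lets you dry-run the loop with JUJU=echo:

```shell
#!/bin/sh
# JUJU is a hypothetical override so the loop can be dry-run with JUJU=echo.
JUJU="${JUJU:-juju}"

# status_all N: show the status of model pxc-repI on controller juju-dcI
# for I = 1..N (names follow the convention used in this post).
status_all() {
    i=1
    while [ "$i" -le "$1" ]; do
        "$JUJU" status -m "juju-dc${i}:pxc-rep${i}"
        i=$((i + 1))
    done
}

# Example: status_all 3
```

Because the model is addressed explicitly, the loop does not change your currently selected controller.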

Setting up circular asynchronous MySQL replication

In order to set up circular asynchronous MySQL replication between all three Percona XtraDB Clusters we have to relate them. However, as they don't belong to the same model / controller / cloud, we have to create offers [5] first (offers allow cross-model / cross-controller / cross-cloud relations):

$ juju switch juju-dc1

$ juju offer pxc1:slave

$ juju switch juju-dc2

$ juju offer pxc2:slave

$ juju switch juju-dc3

$ juju offer pxc3:slave

Then we have to consume the offers:

$ juju switch juju-dc1

$ juju consume juju-dc2:admin/pxc-rep2.pxc2 pxc2

$ juju switch juju-dc2

$ juju consume juju-dc3:admin/pxc-rep3.pxc3 pxc3

$ juju switch juju-dc3

$ juju consume juju-dc1:admin/pxc-rep1.pxc1 pxc1

Finally, we can add the cross-cloud relations:

$ juju switch juju-dc1

$ juju relate pxc1:master pxc2

$ juju switch juju-dc2

$ juju relate pxc2:master pxc3

$ juju switch juju-dc3

$ juju relate pxc3:master pxc1
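The same three steps generalise to any number of sites: every cluster offers its slave endpoint, and each cluster then becomes the master of its successor in the ring, with the last one wrapping around to the first. The sketch below only prints the commands rather than running them, so you can review them first. It assumes the juju-dcN / pxc-repN / pxcN naming used in this post, and the function name ring_commands is my own:

```shell
#!/bin/sh
# ring_commands N: print the offer, consume and relate commands for a ring
# of N sites (N >= 2), where site I is the master of site I+1 and site N
# wraps around to site 1. Hypothetical helper; names follow this post.
ring_commands() {
    n="$1"
    # Step 1: every cluster offers its slave endpoint.
    i=1
    while [ "$i" -le "$n" ]; do
        echo "juju switch juju-dc${i}"
        echo "juju offer pxc${i}:slave"
        i=$((i + 1))
    done
    # Steps 2 and 3: each cluster consumes the offer of its successor
    # and relates its own master endpoint to it.
    i=1
    while [ "$i" -le "$n" ]; do
        next=$((i % n + 1))
        echo "juju switch juju-dc${i}"
        echo "juju consume juju-dc${next}:admin/pxc-rep${next}.pxc${next} pxc${next}"
        echo "juju relate pxc${i}:master pxc${next}"
        i=$((i + 1))
    done
}

ring_commands 3
```

For three sites this prints the same commands as above, except that each consume is immediately followed by its relate instead of running all consumes first. That ordering should be equivalent, since every offer already exists by then.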

Wait a couple of minutes and check whether all units have returned to the active state:

$ juju switch juju-dc1

$ juju status

Model     Controller  Cloud/Region  Version  SLA
pxc-rep1  juju-dc1    maas-dc1      2.3.7    unsupported

SAAS  Status  Store     URL
pxc2  active  maas-dc2  admin/pxc-rep2.pxc2

App             Version       Status  Scale  Charm            Store  Rev  OS      Notes
hacluster-pxc1                active      3  hacluster        local    0  ubuntu
pxc1            5.6.37-26.21  active      3  percona-cluster  local   45  ubuntu

Unit                 Workload  Agent  Machine  Public address  Ports     Message
pxc1/0*              active    idle   0        10.0.1.1        3306/tcp  Unit is ready
  hacluster-pxc1/0*  active    idle            10.0.1.1                  Unit is ready and clustered
pxc1/1               active    idle   1        10.0.1.2        3306/tcp  Unit is ready
  hacluster-pxc1/1   active    idle            10.0.1.2                  Unit is ready and clustered
pxc1/2               active    idle   2        10.0.1.3        3306/tcp  Unit is ready
  hacluster-pxc1/2   active    idle            10.0.1.3                  Unit is ready and clustered

Machine  State    DNS       Inst id        Series  AZ  Message
0        started  10.0.1.1  juju-83da9e-0  xenial      Running
1        started  10.0.1.2  juju-83da9e-1  xenial      Running
2        started  10.0.1.3  juju-83da9e-2  xenial      Running

Offer  Application  Charm            Rev  Connected  Endpoint  Interface                Role
pxc1   pxc1         percona-cluster  48   1/1        slave     mysql-async-replication  requirer

Relation provider      Requirer               Interface                Type         Message
hacluster-pxc1:ha      pxc1:ha                hacluster                subordinate
hacluster-pxc1:hanode  hacluster-pxc1:hanode  hacluster                peer
pxc1:cluster           pxc1:cluster           percona-cluster          peer
pxc1:master            pxc2:slave             mysql-async-replication  regular

At this point you should have circular asynchronous MySQL replication working between all three Percona XtraDB Clusters. The asterisk character in the output above indicates the leader unit. Let's check whether it's actually working by connecting to the MySQL console on the leader unit:

$ juju ssh pxc1/0

$ mysql -u root -p

First, check whether, as a master, it has granted access to the pxc2 application units:

mysql> SELECT Host FROM mysql.user WHERE User='replication';

+----------+
| Host     |
+----------+
| 10.0.2.1 |
| 10.0.2.2 |
| 10.0.2.3 |
+----------+
3 rows in set (0.00 sec)

Then check its status as a slave of pxc3:

mysql> SHOW SLAVE STATUS\G

*************************** 1. row ***************************

Slave_IO_State: Waiting for master to send event

Master_Host: 10.0.3.100

Master_User: replication

Master_Port: 3306

Connect_Retry: 60

Master_Log_File: mysql-bin.000004

Read_Master_Log_Pos: 338

Relay_Log_File: mysqld-relay-bin.000002

Relay_Log_Pos: 283

Relay_Master_Log_File: mysql-bin.000004

Slave_IO_Running: Yes

Slave_SQL_Running: Yes

Replicate_Do_DB:

Replicate_Ignore_DB:

Replicate_Do_Table:

Replicate_Ignore_Table:

Replicate_Wild_Do_Table:

Replicate_Wild_Ignore_Table:

Last_Errno: 0

Last_Error:

Skip_Counter: 0

Exec_Master_Log_Pos: 338

Relay_Log_Space: 457

Until_Condition: None

Until_Log_File:

Until_Log_Pos: 0

Master_SSL_Allowed: No

Master_SSL_CA_File:

Master_SSL_CA_Path:

Master_SSL_Cert:

Master_SSL_Cipher:

Master_SSL_Key:

Seconds_Behind_Master: 0

Master_SSL_Verify_Server_Cert: No

Last_IO_Errno: 0

Last_IO_Error:

Last_SQL_Errno: 0

Last_SQL_Error:

Replicate_Ignore_Server_Ids:

Master_Server_Id: 1

Master_UUID: e803b085-739f-11e8-8f7e-00163e391eab

Master_Info_File: /var/lib/percona-xtradb-cluster/master.info

SQL_Delay: 0

SQL_Remaining_Delay: NULL

Slave_SQL_Running_State: Slave has read all relay log; waiting for the slave I/O thread to update it

Master_Retry_Count: 86400

Master_Bind:

Last_IO_Error_Timestamp:

Last_SQL_Error_Timestamp:

Master_SSL_Crl:

Master_SSL_Crlpath:

Retrieved_Gtid_Set:

Executed_Gtid_Set:

Auto_Position: 0

1 row in set (0.00 sec)
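The two fields that matter most in this output are Slave_IO_Running and Slave_SQL_Running: replication is only healthy when both say Yes. If you want to check this from a script instead of reading the output by eye, something along these lines should work. It is a rough sketch: the function name replication_ok is my own, and it simply parses the text that SHOW SLAVE STATUS\G prints:

```shell
#!/bin/sh
# replication_ok: read `SHOW SLAVE STATUS\G` output on stdin and succeed
# only if both replication threads report "Yes". Empty input (the server
# is not configured as a slave) counts as a failure.
replication_ok() {
    awk -F': ' '
        {
            gsub(/^[ \t]+/, "", $1)      # strip the left padding of the key
            gsub(/[ \t\r]+$/, "", $2)    # strip trailing whitespace of the value
        }
        $1 == "Slave_IO_Running"  { io  = $2 }
        $1 == "Slave_SQL_Running" { sql = $2 }
        END { exit !(io == "Yes" && sql == "Yes") }
    '
}

# Example, run on a database unit:
#   mysql -u root -p -e 'SHOW SLAVE STATUS\G' | replication_ok && echo healthy
```

The exact key comparison matters here, because Slave_SQL_Running_State starts with the same prefix as Slave_SQL_Running and must not be mistaken for it.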

Finally, create a database:

mysql> CREATE DATABASE example;

Query OK, 1 row affected (0.01 sec)

and check whether it has been created on pxc2:

$ juju switch juju-dc2

$ juju ssh pxc2/0

$ mysql -u root -p

mysql> show databases;

+--------------------+
| Database           |
+--------------------+
| information_schema |
| example            |
| mysql              |
| performance_schema |
| test               |
+--------------------+
5 rows in set (0.00 sec)

It is there! It should be created on pxc3 as well. Go there and check it.
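If you have more than a handful of sites, you may prefer to script this check. Here is a small sketch of a helper that reads a list of database names, one per line, and tells you whether a given one is present. The function name has_database is hypothetical, and the usage line assumes the mysql client's -N (skip column names) and -B (batch) options to get plain output:

```shell
#!/bin/sh
# has_database NAME: read database names on stdin, one per line, and
# succeed only if NAME is among them (exact match on the whole line).
has_database() {
    # Carriage returns are removed in case the list came through a terminal.
    tr -d '\r' | grep -Fqx -- "$1"
}

# Example, run on a database unit:
#   mysql -u root -p -N -B -e 'SHOW DATABASES' | has_database example && echo replicated
```

Matching the whole line avoids false positives from databases whose names merely contain the one you are looking for.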

At this point you can write to the example database from all units of all Percona XtraDB Clusters. This is how circular asynchronous MySQL replication works. Isn't that easy? Of course it is, thanks to Juju!
